o3 competitor: GLM 4.5 by Zhipu AI

- hybrid reasoning model (on by default)

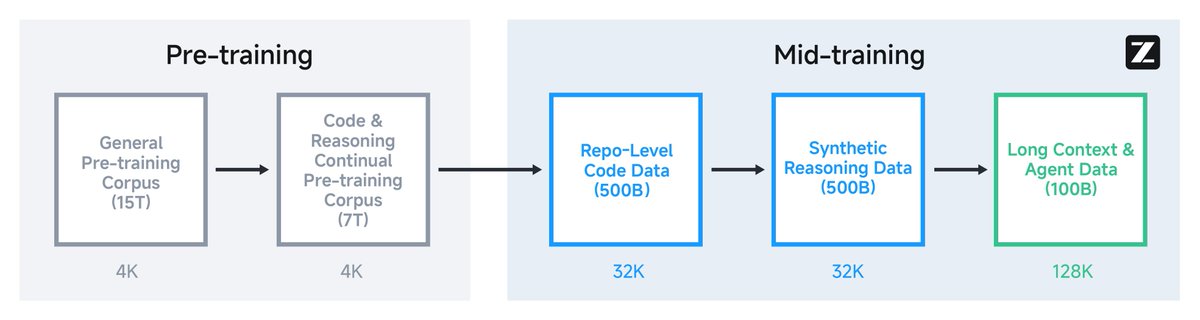

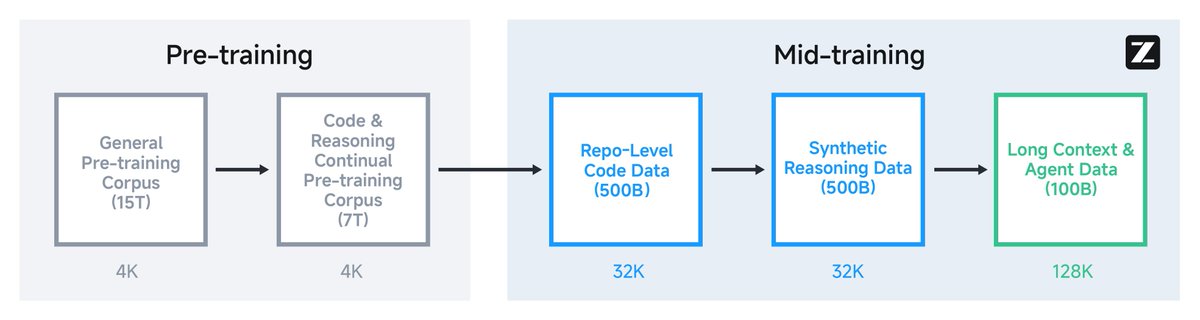

- trained on 15T tokens

- 128k context, 96k output tokens

- $0.11 / 1M tokens

- MoE: 355B A32B and 106B A12B

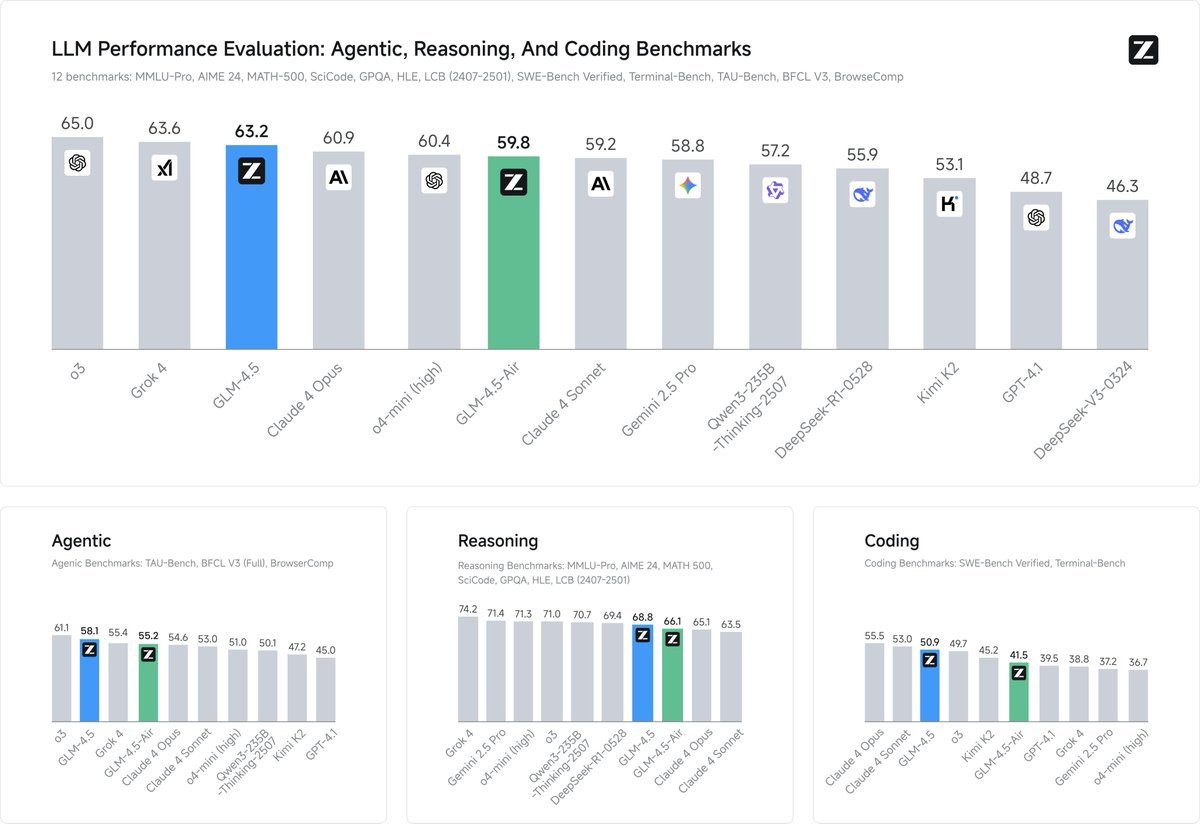

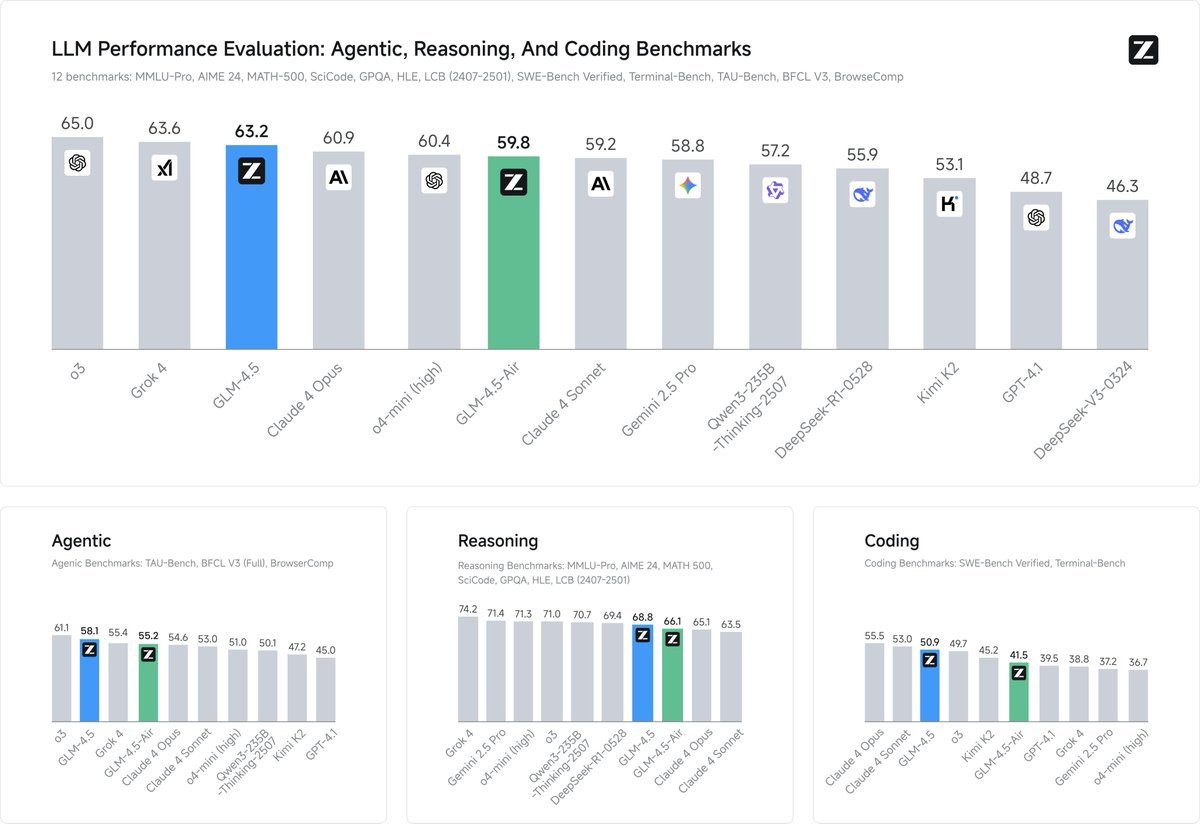

Benchmark details:

- tool calling: 90.6% success rate vs Sonnet’s 89.5% vs Kimi K2 86.2%

- coding: 40.4% win rate vs Sonnet, 53.9% vs Kimi K2, 80.8% vs Qwen3 Coder

Models: https://t.co/lBho4dbEha

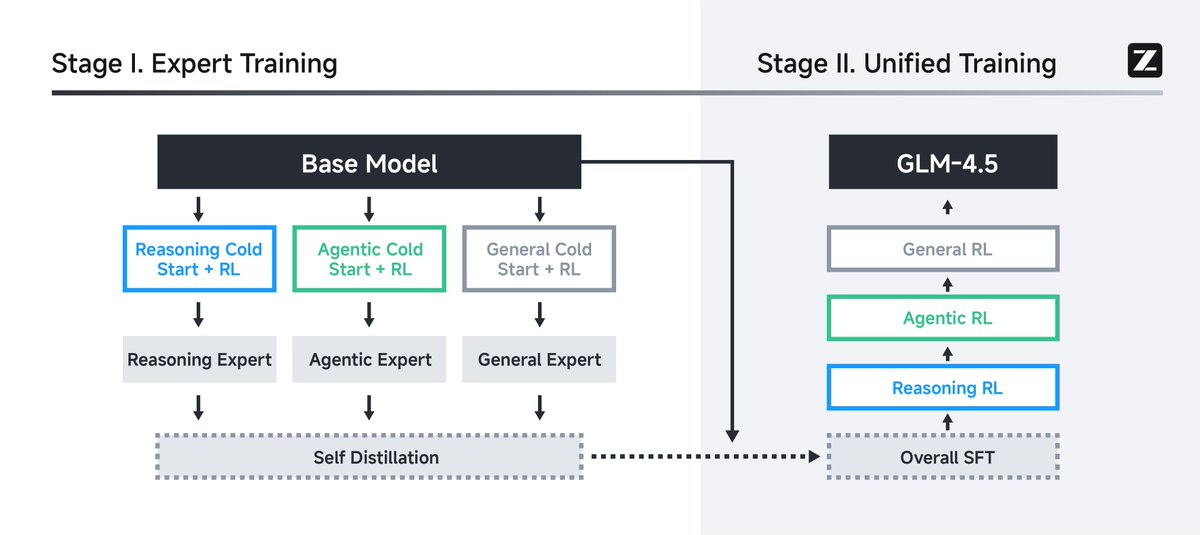

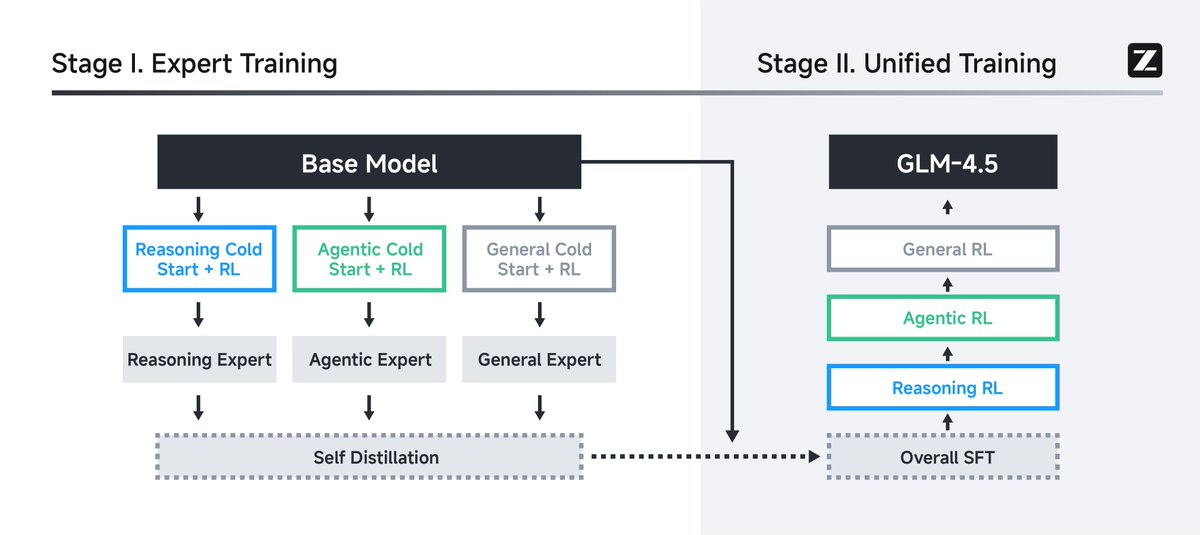

Zhipu AI has also released their entire post-training infrastructure: https://t.co/VvYMfqbDJo https://t.co/ZV0ePLjuxW

Slight correction to the post:

- it was not just 15T tokens

- it was 23.1T tokens total! https://t.co/M56HlhfFPQ

For those wondering how to get GLM 4.5 cheaply: you need to use their Mainland China API.

https://t.co/CphUf9FdiZ

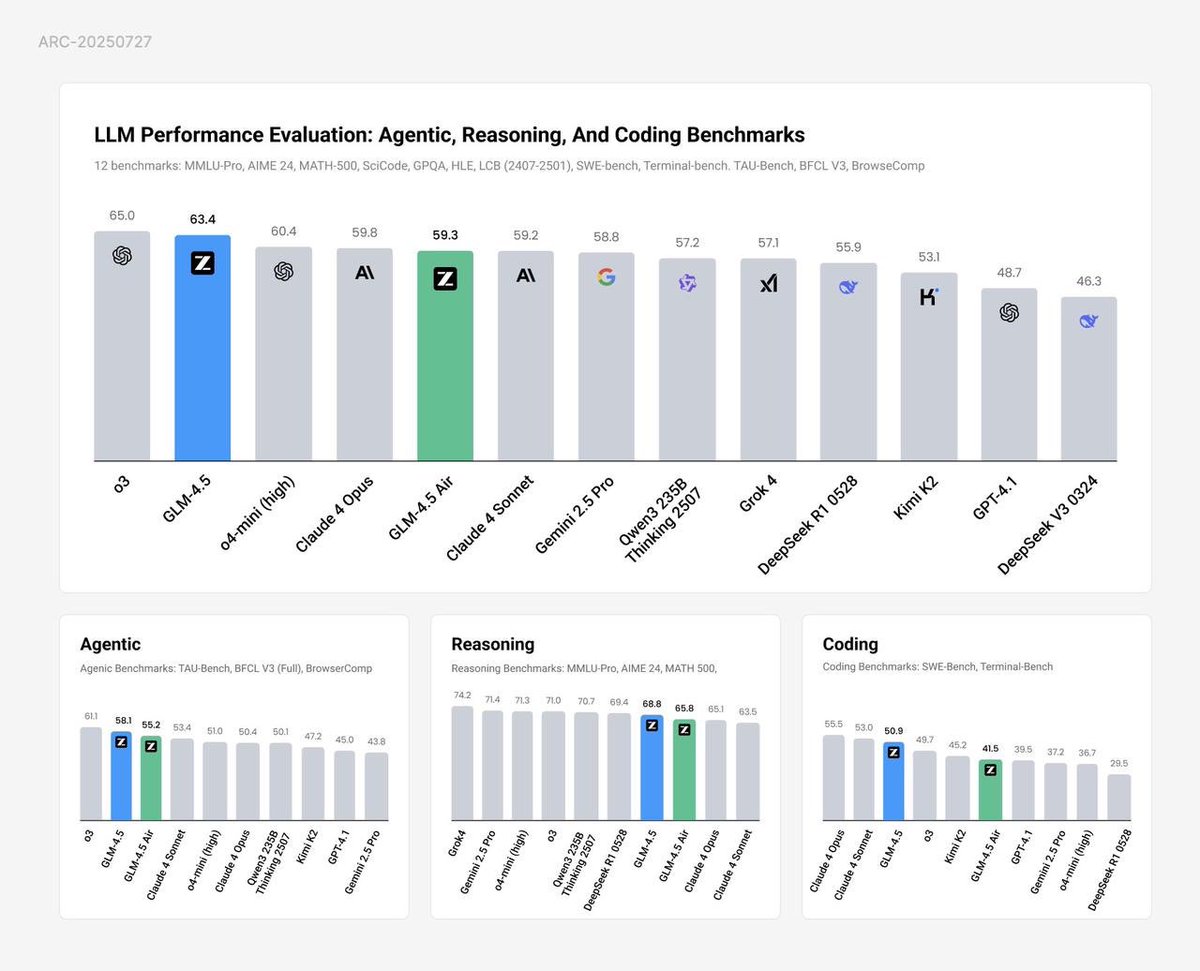

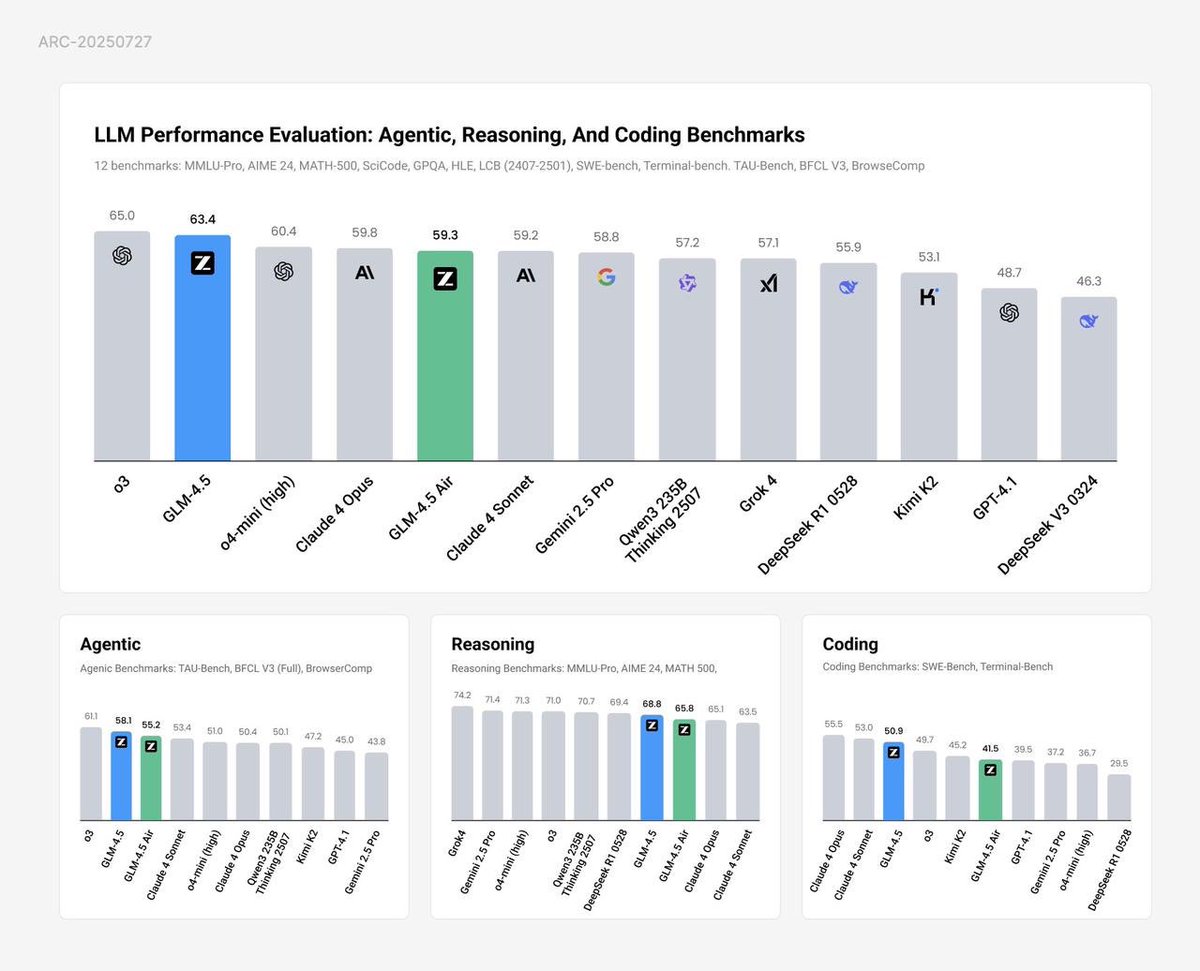

Update: Zhipu AI says the initial benchmark I posted are not up-to-date, so here is the updated version.

Note that I found the original benchmark comparison on their bigmodel documentation. https://t.co/E0PBaF9Kti