An advanced version of Gemini with Deep Think has officially achieved gold medal-level performance at the International Mathematical Olympiad. 🥇

It solved 5️⃣ out of 6️⃣ exceptionally difficult problems, involving algebra, combinatorics, geometry and number theory. Here’s how 🧵

In a significant advance over last year's results, Gemini was given the same problem statements and the same 4.5-hour time limit as human competitors, and it still produced rigorous mathematical proofs.

It scored 35 out of a possible 42 points, equivalent to earning a gold medal.

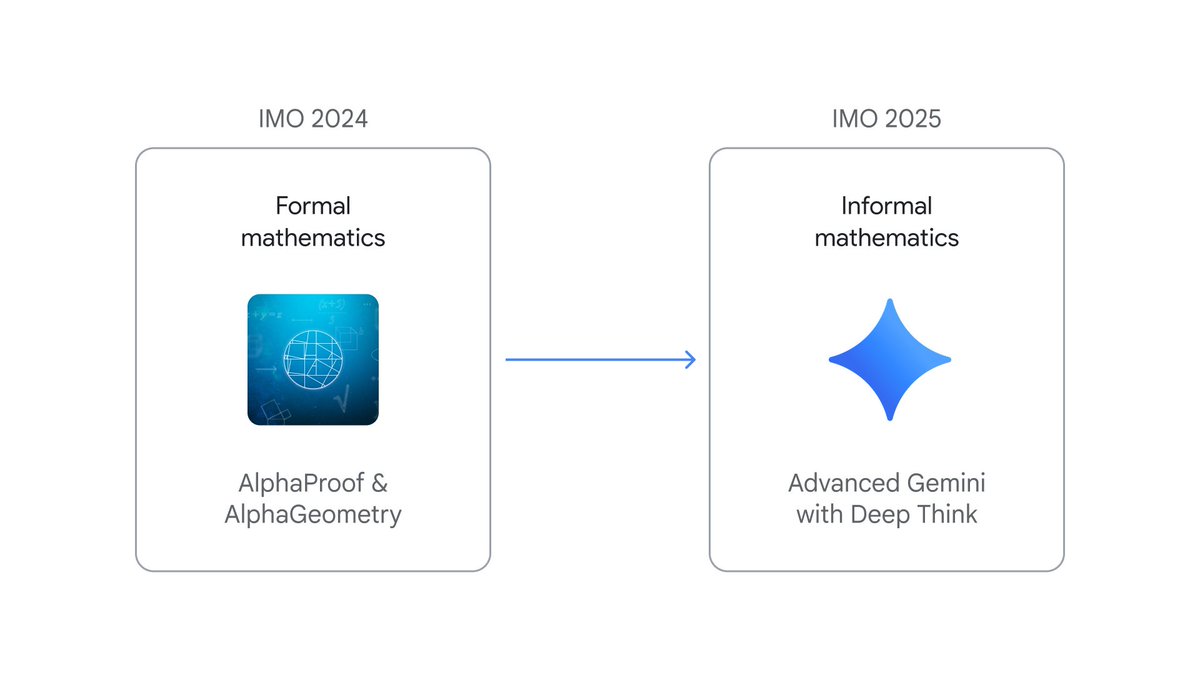

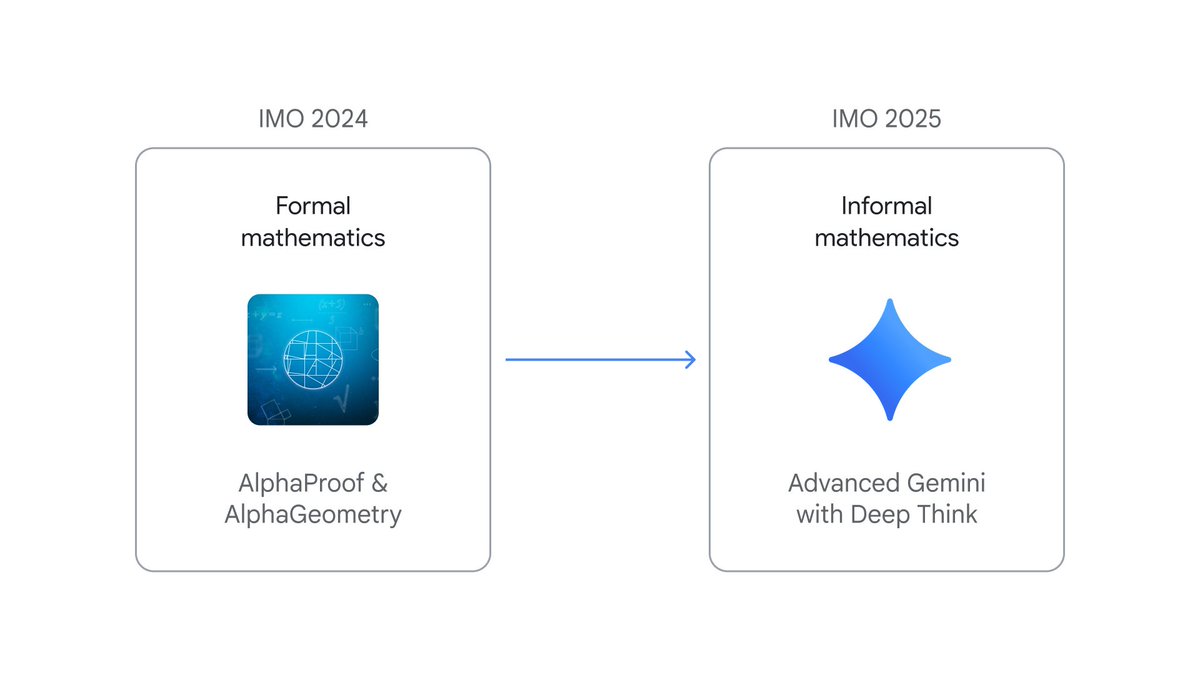

Gemini solved the math problems end-to-end in natural language (English).

This differs from last year, when experts first had to translate the problems into formal languages such as Lean before specialized systems could tackle them.

With Deep Think, an enhanced reasoning mode, our model could simultaneously explore and combine multiple possible solutions before giving a definitive answer.

We also trained it with reinforcement learning (RL) techniques that make greater use of multi-step reasoning, problem-solving and theorem-proving data.

Finally, we pushed this version of Gemini further by giving it:

🔘 More thinking time

🔘 Access to a set of high-quality solutions to previous problems

🔘 General hints and tips on how to approach IMO problems

These results show we're closer to building systems that can solve more complex mathematics.

A version of this model with Deep Think will soon be available to trusted testers, before rolling out to Google AI Ultra subscribers.

Find out more ↓ https://t.co/U1n7tC5gg1